Artificial intelligence (AI) is revolutionizing marketing practices, empowering companies with unprecedented insights into customers and personalized messaging. However, with these advancements come significant ethical considerations surrounding data privacy, transparency, and bias. As AI unlocks new marketing capabilities, companies must responsibly navigate these risks to uphold ethical standards and build trust with customers. By thoughtfully addressing data privacy, promoting transparency, and mitigating biases, marketers can harness the power of AI while safeguarding customer relationships and sustaining a competitive edge.

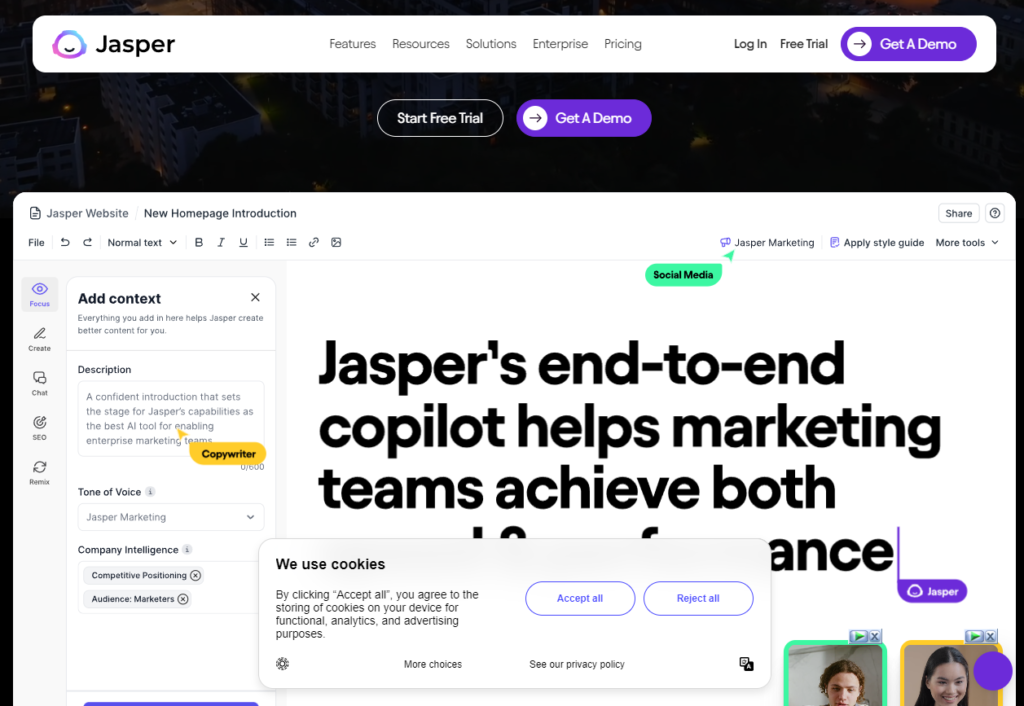

Megan Keaney Anderson, VP of marketing at Jasper.AI, explains:

It’s really important when you adopt AI in your organization that you don’t just plug in the technology and say, ‘Go.’ There is an investment that we have to make as leaders in our companies to increase the AI literacy across your organization. Make sure that everybody knows, yes, about all the ways you can use AI, but also all the ethical questions, risks, and considerations that come with it. That is how you get to responsible AI usage, and those run the gamut. They include things like bias and inaccuracy. They include things like the environmental impact of large language models. They include things like making sure that you are not spreading misinformation by over-optimizing on AI. There’s a full spectrum of considerations that you need to learn about as a company as you adopt AI. But I think the biggest thing to remember here that will be a north star for all of this is: be careful what you outsource to AI. AI should be an assistant, not a replacement. If you have important decisions about where you spend your money, how you work with your customers, and what you are doing from a business strategy standpoint, consider AI an input, but not the answer. That answer, that decision should always sit with the strategists and the human in the loop.

Let’s begin by talking about the challenges around data privacy. Protecting customer data privacy is a critical ethical obligation for marketers leveraging AI. AI algorithms rely on access to customer data – the more data they can analyze, the better they can profile customers and personalize experiences. However, companies have a duty to collect, store, and use data transparently and responsibly.

Some specific data privacy considerations marketers need to be aware of include:

- Limiting data collection to information that’s necessary and relevant for delivering personalized experiences. Avoid collecting extraneous data just because it is available.

- Implementing robust cybersecurity measures to protect stored customer data from breaches. Encrypt data and limit internal access.

- Following data protection regulations such as the California Consumer Privacy Act (CCPA) and the EU’s General Data Protection Regulation (GDPR), which govern the use of personal data.

- Providing customers with transparency and control over how their data is used. Enable them to opt out of data collection or delete their information.

Let’s talk a bit more about transparency. It’s important that AI marketing is transparent; companies must clearly communicate their use of AI technology to customers. Obscuring how AI is used can erode trust.

Alyssa Harvey Dawson, HubSpot’s chief legal officer, explains: Whether it is using AI or any other technology as part of a marketing campaign, marketers must make sure that they are respecting people’s personal data rights and using it in compliance with relevant laws and regulations and user expectations. This is not only necessary from a legal perspective but is also crucial to maintaining trust with your customers. Some best practices to follow include: be transparent with customers about how you use and collect their data; only use data that is necessary for that marketing campaign; make sure that any personal data that is being used is used within the bounds of applicable laws and your customer’s expectations; educate your marketing and customer service teams about data privacy regulations and best practices; and finally, understand what your third-party AI tools are doing with the data that you input.

Establishing transparent communication doesn’t have to be complicated. Explain in clear language what AI capabilities the company uses to engage with customers and personalize their experience. Avoid vague tech jargon and disclose when a customer is interacting with an AI chatbot versus human staff. Deception erodes trust. You should also allow customers to ask questions and get clear explanations about the company’s AI practices. It’s important to enable human oversight.

As an example, HubSpot’s AI ethics framework is publicly available on our website. You can also find a link in our resources section.

Alongside data privacy and transparency, you need to be aware of the potential biases of AI.

Alyssa Harvey Dawson, chief legal officer, HubSpot: Mitigating biases in AI-driven insights or algorithms is crucial to ensure fairness and accuracy in marketing. Biases can arise from the data used to train AI models, as well as from the design and implementation of algorithms. So, it’s really important to do some of the following: Make sure that the training data used for AI models is diverse and representative of the target audience. Include data from various demographic groups, geographic regions, and customer segments. Also, be mindful of historical biases in data and actively work to correct them. And then, finally, fact-check your outputs, and continuously monitor the performance of AI-driven marketing campaigns and algorithms for bias.

AI algorithms can perpetuate and amplify existing biases if they are trained on biased datasets. Marketers must proactively identify and minimize biases.

Specific considerations around bias include:

- Auditing AI training datasets to identify possible demographic, gender, racial, or other biases, and cleaning problematic data.

- Monitoring AI marketing campaigns for uneven impacts on specific customer segments.

- Establishing human oversight processes to review and correct AI systems for issues of bias.

Responsibly addressing ethics in AI strengthens customer trust and the integrity of marketing practices.
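
As one way to picture the monitoring step above, here is a minimal Python sketch that compares campaign outcomes across customer segments and flags any segment that falls well behind the best-performing one. The segment names, the sample data, and the 0.8 threshold are illustrative assumptions, not figures from this lesson; a real review would choose metrics and thresholds together with legal and analytics teams.

```python
from collections import defaultdict


def segment_rates(events):
    """Return the conversion rate for each customer segment.

    `events` is an iterable of (segment, converted) pairs, where
    `converted` is True when the campaign had its intended outcome.
    """
    shown = defaultdict(int)
    converted = defaultdict(int)
    for segment, did_convert in events:
        shown[segment] += 1
        if did_convert:
            converted[segment] += 1
    return {seg: converted[seg] / shown[seg] for seg in shown}


def flag_uneven_segments(rates, min_ratio=0.8):
    """List segments whose rate is below `min_ratio` times the best rate.

    The 0.8 default is an assumed threshold for illustration only.
    """
    best = max(rates.values())
    if best == 0:
        return []  # nothing converted anywhere, so there is no gap to compare
    return sorted(seg for seg, rate in rates.items() if rate / best < min_ratio)


# Hypothetical campaign results: 100 customers per segment.
events = (
    [("segment_a", True)] * 30 + [("segment_a", False)] * 70
    + [("segment_b", True)] * 15 + [("segment_b", False)] * 85
)

rates = segment_rates(events)
flagged = flag_uneven_segments(rates)
print(rates)
print("Needs human review:", flagged)
```

A flagged segment is a prompt for human review, not proof of bias; the gap may have an ordinary explanation that the oversight process should confirm or rule out.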

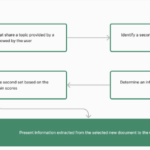

Let’s identify a few ways you can implement ethical AI marketing practices and gain a competitive advantage.

1. Data Collection and Use

- Conduct privacy impact assessments to identify potential risks in collecting and using customer data. Only gather necessary data.

- Create consent flows that clearly explain to customers how their data will be used and provide opt-out options. Honor opt-out requests promptly.

2. AI Software Selection and Testing

- Thoroughly vet AI software providers to make sure their models account for biases and discrimination issues. Select ethical providers.

- Test AI marketing software extensively before deployment to uncover potential biases or performance issues. Only deploy vetted software.

3. Monitoring and Auditing

- Build cross-functional teams including marketing, legal, and IT to continuously monitor marketing campaigns for compliance with internal ethics policies and external regulations. Maintain diligent oversight.

- Conduct scheduled audits of all AI marketing practices, including data collection, software selection, and campaign monitoring. Swiftly address identified issues.

- Provide transparency to customers, such as creating an ethics advisory board and publishing regular reports on ethical AI initiatives. Communicate openly and honestly.

Focusing on rigorous selection, testing, and monitoring of purchased AI software will enable marketers to use these tools responsibly. Ongoing auditing and transparency will uphold ethical standards.

Alyssa Harvey Dawson, chief legal officer, HubSpot: Using AI responsibly means continuously and diligently monitoring the use of AI tools. It means taking a proactive approach to identify and mitigate ethical risks. So, monitoring AI systems can really involve a number of elements. Many companies will identify key performance indicators, KPIs, and metrics that are relevant to ethical considerations and then measure against them. These may include fairness, bias, transparency, privacy, and accountability metrics. It is also important to monitor the data used for training, testing, and the ongoing operation of AI models, and then use the insights gained from monitoring and evaluation to make continuous improvements to your AI systems and processes. Another important element of monitoring and evaluation of your AI tools is creating channels for users and customers to provide feedback on AI-related ethical concerns, and then, importantly, promptly addressing that feedback so you can improve your AI systems.

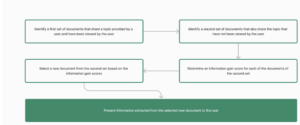

So what does this look like in practice? Let’s review two different scenarios.

Company A knew they needed to protect customer data privacy while leveraging AI for personalized marketing. They wanted to improve their customer retention and lifetime value through highly personalized marketing campaigns powered by AI. However, they knew this required collecting more customer data that could infringe on privacy. Company A decided to implement several ethical safeguards:

- Allowing customers to explicitly opt in to or opt out of data collection used for AI-driven personalization

- Anonymizing customer data used to train AI models by stripping out personally identifiable information

- Building a secure data warehouse to store customer data separately from general company systems

- Establishing an internal privacy review board to oversee data practices

With these controls in place, Company A felt confident rolling out AI-powered targeted campaigns while upholding strong privacy protections. Open communication and respecting customer preferences were key.
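
To make the first two safeguards concrete, the following Python sketch shows one possible way to prepare customer records for model training: it keeps only customers who explicitly opted in and drops fields that directly identify a person. The field names (`personalization_opt_in`, `name`, `email`, and so on) and the sample records are hypothetical, chosen only for illustration. Dropping direct identifiers is also just a first step, since the remaining fields can sometimes still identify someone when combined.

```python
# Hypothetical names of fields that directly identify a person.
PII_FIELDS = {"name", "email", "phone", "address"}

# Hypothetical name of the consent flag stored with each record.
CONSENT_FIELD = "personalization_opt_in"


def prepare_training_records(customers):
    """Keep only opted-in customers and drop PII fields from each record."""
    prepared = []
    for record in customers:
        # Treat a missing consent flag as "no consent".
        if not record.get(CONSENT_FIELD, False):
            continue
        cleaned = {
            key: value
            for key, value in record.items()
            if key not in PII_FIELDS and key != CONSENT_FIELD
        }
        prepared.append(cleaned)
    return prepared


# Made-up sample records.
customers = [
    {"name": "Ada Example", "email": "ada@example.com", "plan": "pro",
     "orders_last_90d": 4, "personalization_opt_in": True},
    {"name": "Bo Example", "email": "bo@example.com", "plan": "free",
     "orders_last_90d": 1, "personalization_opt_in": False},
]

training = prepare_training_records(customers)
print(training)
```

Defaulting to exclusion when the consent flag is missing reflects the opt-in approach described above: a customer's data is used only when consent is explicit.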

Company B knew they needed to transparently communicate AI use and mitigate potential biases in targeted advertising. They used AI to optimize digital ad targeting and bidding, but wanted to avoid inadvertently discriminatory practices. They implemented several ethical controls:

- Adding prominent notices on their website explaining how they were using AI for advertising purposes

- Establishing strict protocols to scrub training data and proactively test for biases in the AI models

- Instituting human oversight processes to review ad campaigns for fairness and actively monitor impact on different customer demographics

- Identifying measures taken to ensure responsible AI use in their sustainability reports

Through these efforts, Company B was able to harness the advantages of AI-powered ad targeting while maintaining high ethical standards and customer trust. Their example demonstrates that marketing innovation and ethics are compatible.

Unethical AI practices can inflict severe damage on businesses, including legal issues, financial losses, and reputational harm. Mishandling customer data or allowing algorithmic biases erodes trust, damages brand image, and leads to lost revenue and lawsuits. In today’s landscape, where privacy and inclusion matter, companies must make ethical AI a priority. Implementing responsible data practices, transparency, and bias mitigation allows businesses to maintain moral standards, customer loyalty, legal compliance, and brand reputation. The potential dangers of unethical AI highlight the critical need for ethical AI standards that companies must follow.

Lately CEO Kate Bradley Chernis has another interesting perspective to consider:

When it comes to ethics and AI, everyone is often focused on things like copyright infringement, which is obviously valid. But there’s another point of view: how humans feel about the AI and not feeling threatened, for example. And there’s this great metaphor that I love to share that shows how important it is for this collaboration to be part of the mix. It’s not only about ethics; I mean, that’s part of it. But it’s about seeing those results, right? That’s ultimately what we all care about. Betty Crocker, as you guys all know, makes great cake in a box. I love devil’s food cake; that’s my favorite. And when they first released it in the 1950s, all you had to do was add water. Now, the housewives at the time, whom they were marketing to, thought that was weird. They had no ownership in the process. They didn’t feel like they’d made or baked anything. So Betty Crocker took the powdered eggs out, and their tagline became “just add an egg,” and the sales soared, right? There’s a similar thing here. Well, of course, we all understand the ethics of stealing, right? Nobody wants to be caught stealing anybody’s content, for sure. But there’s another key component here, which is literally the emotional attachment, or detachment, from AI. And when you can put these things together, the AI and the human, when they can work and humans feel ownership in the product that the AI is generating, that’s when you’ll see not only great ethic policies but also great results.

The rise of AI in marketing brings huge opportunities to better understand and engage customers. However, this progress requires a strong commitment to ethics and responsible use. Respecting ethical considerations in AI marketing is not only right but also a competitive edge in a data-driven world. By embracing responsible AI use, marketers can harness its power while upholding the values customers expect.