Performing a technical SEO audit of a website is one of the core functions of an SEO professional. An audit helps

you assess the technical aspects of a website and identify places where you can optimize or make improvements

to the site’s performance, crawlability, and search visibility.

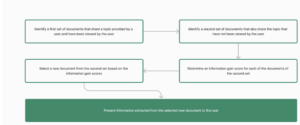

Depending on the type of website and its size, your approach to a technical audit may differ. However, you can

use the following framework as a starting point for any type of technical SEO audit.

First, make sure you have the necessary tools in place. The first tool at your disposal is free and comes from

Google itself: Google Search Console (or GSC for short). In addition to Google Search Console, you may want to

use a website crawler, like Screaming Frog, a dedicated SEO tool, like SEMrush, Moz, or Ahrefs, and analytics

tools, like Google Analytics or Nexorank. We won’t review these tools, since the tools you use depend on your

individual situation. Instead, we’ll focus on the freely available tools provided by Google.

To get started, break down your technical SEO audit into the following sections:

- Crawlability and indexation

- Duplicate content

- Site speed

- Mobile-friendliness

- Structured data

- JavaScript rendering

Let’s go through each stage in a little more detail. Remember, as you go through your audit, use the provided

checklist to track errors and fixes.

First, identify issues with crawlability and indexation. This step involves making sure that Google (and other search

engines) can properly crawl and index your website.

In Google Search Console, navigate to Settings and open your Crawl Stats report. Here, you’ll see a crawl

request trend report, host status details, and a crawl request breakdown.

You want to focus on ensuring you receive an “OK (200)” response, which means Google was able to successfully

crawl your page. For any pages that didn’t return a 200 response, try to determine why the crawl failed. For

example, why did a page return a “Not found (404)” response? Has it been deleted and therefore should be

removed from your sitemap?

Here’s a pro tip: Focus on pages that return a 4xx or 5xx status code. These are the codes to be concerned with

since they’re client and server error responses. Generally, you don’t need to worry about 3xx redirect codes. They

just mean the URL you’re inspecting forwards to another URL, so the destination page is the one that can rank.
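
If you export your crawl results, you can sort them with a few lines of code. The following is a minimal sketch, assuming you have a mapping of URLs to status codes. The function names and the sample URLs are hypothetical and aren’t part of any Google tool.

```python
def classify_status(code: int) -> str:
    """Group an HTTP status code into a rough audit category."""
    if 200 <= code < 300:
        return "ok"
    if 300 <= code < 400:
        return "redirect"
    if 400 <= code < 500:
        return "client error"
    if 500 <= code < 600:
        return "server error"
    return "other"


def pages_to_investigate(crawl_results: dict[str, int]) -> list[str]:
    """Return the URLs whose status code signals a client or server error."""
    return sorted(
        url
        for url, code in crawl_results.items()
        if classify_status(code) in {"client error", "server error"}
    )


# Hypothetical crawl export: URL -> status code.
sample = {
    "https://www.example.com/": 200,
    "https://www.example.com/old-page": 301,
    "https://www.example.com/deleted-page": 404,
    "https://www.example.com/checkout": 500,
}
print(pages_to_investigate(sample))
```

Here, only the 404 and 500 pages are flagged for follow-up, while the 301 redirect is left alone.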

Next, check out the Coverage report in GSC.

Here, you’ll see the overall status of the indexed pages on your site,

along with a helpful list of indexation errors. Since Google wasn’t able to index these pages, they won’t appear in

Google search. In some cases, this is a good thing. For example, you may intend for a page, like a private

membership page, to not appear in search results by tagging it as “noindex.”
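
To confirm whether a page is intentionally excluded, you can check its HTML for a robots meta tag. Here’s a rough sketch using Python’s built-in HTML parser. The class and function names are hypothetical, and the check only looks at the meta tag, so it won’t catch a noindex directive sent through an HTTP header.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives from any <meta name="robots"> tag."""

    def __init__(self) -> None:
        super().__init__()
        self.directives: set[str] = set()

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attr = dict(attrs)
        if (attr.get("name") or "").lower() == "robots":
            content = attr.get("content") or ""
            # Directives are comma-separated, e.g. "noindex, nofollow".
            self.directives.update(
                part.strip().lower() for part in content.split(",") if part.strip()
            )


def is_noindexed(html: str) -> bool:
    """Return True if the page's robots meta tag contains noindex."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


members_page = '<html><head><meta name="robots" content="noindex, nofollow"></head></html>'
print(is_noindexed(members_page))
```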

Be sure to review all the indexation errors in this report to identify which pages were meant to be excluded from

search and which were not. If you want to review a specific page in detail, you can use the URL Inspection tool.

Here, you can determine if a page has been changed and make a request to have it re-indexed.

Next, review your sitemaps.

There are two types of sitemaps: HTML and XML. It’s important to ensure all indexable

pages are submitted to Google in the XML sitemap. Check the Sitemap report in GSC to find out if your sitemap

has been submitted, when it was last read, and the status of the submission or crawl. You can learn more about

sitemaps in the video, Allowing Google to Index Your Pages, linked in the resources section.
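
One way to check that every indexable page is listed is to compare your own list of URLs against the XML sitemap. The sketch below assumes a standard sitemap using the sitemaps.org namespace. The sample sitemap, URLs, and function names are made up for illustration.

```python
import xml.etree.ElementTree as ET

# Namespace used by standard XML sitemaps.
SITEMAP_NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}


def sitemap_urls(xml_text: str) -> list[str]:
    """Extract every <loc> URL from an XML sitemap."""
    root = ET.fromstring(xml_text)
    return [
        loc.text.strip()
        for loc in root.findall("sm:url/sm:loc", SITEMAP_NS)
        if loc.text
    ]


def missing_from_sitemap(indexable_urls: list[str], xml_text: str) -> list[str]:
    """Return indexable URLs that the sitemap does not list."""
    listed = set(sitemap_urls(xml_text))
    return sorted(set(indexable_urls) - listed)


# Hypothetical sitemap content.
SAMPLE_SITEMAP = """
<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url><loc>https://www.example.com/</loc></url>
  <url><loc>https://www.example.com/blog/</loc></url>
</urlset>
""".strip()

pages = ["https://www.example.com/", "https://www.example.com/pricing"]
print(missing_from_sitemap(pages, SAMPLE_SITEMAP))
```

In this example, the pricing page is indexable but missing from the sitemap, so it’s the one to add.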

The final step in identifying issues with crawlability and indexation is to review your robots.txt file. The robots.txt file

allows you to exclude parts of your site from being crawled by search engine spiders. You can usually find it by

going to yourwebsite.com/robots.txt. You can also use Google’s free robots.txt testing tool, linked in the

resources.

Make sure your robots.txt file points search engine bots away from private folders, keeps bots from overwhelming

server resources, and specifies the location of your sitemap.
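
Here’s a sketch of what such a file might look like and how you could test it with Python’s built-in `urllib.robotparser` module. The file contents, folder name, and URLs are hypothetical. Also keep in mind that `Crawl-delay` is honored by some crawlers but not by Googlebot, so treat it as one option rather than a guarantee.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt covering the three goals above.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 10

Sitemap: https://www.example.com/sitemap.xml
"""


def audit_robots(robots_txt: str, user_agent: str, urls: list[str]) -> dict:
    """Summarize what a robots.txt file allows for one user agent."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return {
        "allowed": {url: parser.can_fetch(user_agent, url) for url in urls},
        "crawl_delay": parser.crawl_delay(user_agent),
        "sitemaps": parser.site_maps(),  # available in Python 3.8+
    }


report = audit_robots(
    ROBOTS_TXT,
    "ExampleBot",  # hypothetical crawler name
    ["https://www.example.com/private/account", "https://www.example.com/blog/"],
)
print(report)
```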

Depending on what you find during this stage, you’ll likely need to work with your developer or system

administrator to resolve some of the issues as they relate to the sitemap and robots.txt file.

Now, it’s time to identify any duplicate content issues on your site. Duplicate content means there’s similar or

identical information appearing on different pages of your site. This can confuse search engine crawlers, leading

to pages performing poorly in search results.

A site typically suffers from duplicate content when there are several versions of the same URL. For example, your

website may have an HTTP version, an HTTPS version, a www version, and a non-www version. To fix this issue, be

sure to add canonical tags to duplicate pages and set up 301 redirects from your HTTP pages to your HTTPS

pages.

Canonical tags tell search engines which page contains the primary version of your content. This is the

“master page”: the page you want search engines to crawl, index, and rank. You may need to work with a

developer to implement canonical tags and redirect your HTTP site to your HTTPS site.
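
To see how several URL versions collapse into one, consider this small sketch. It assumes the HTTPS www version is your preferred one. The host name and function names are hypothetical, and the code ignores ports and other edge cases, so treat it as an illustration rather than a finished redirect rule.

```python
from urllib.parse import urlsplit, urlunsplit

PREFERRED_HOST = "www.example.com"  # hypothetical preferred host


def preferred_url(url: str) -> str:
    """Map HTTP/HTTPS and www/non-www variants to one preferred URL."""
    parts = urlsplit(url)
    host = parts.hostname or ""
    # Treat example.com and www.example.com as the same site.
    if host.removeprefix("www.") == PREFERRED_HOST.removeprefix("www."):
        host = PREFERRED_HOST
    # Always use HTTPS and drop any fragment.
    return urlunsplit(("https", host, parts.path or "/", parts.query, ""))


def canonical_tag(url: str) -> str:
    """Build the canonical link element for a page."""
    return f'<link rel="canonical" href="{preferred_url(url)}">'


variants = [
    "http://example.com/pricing",
    "http://www.example.com/pricing",
    "https://example.com/pricing",
    "https://www.example.com/pricing",
]
print({preferred_url(v) for v in variants})
print(canonical_tag(variants[0]))
```

All four versions resolve to a single URL, which is the one your canonical tags and 301 redirects should point to.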

The next stage involves auditing the speed of your website. Site speed directly impacts the user experience and

your ability to rank in search results. There are two elements to examine here: page speed, the speed at which

individual pages load, and site speed, the average page speed for a sample group of pages on a site.

Improve your page speed, and your site speed will increase as well.
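
As a quick illustration of how the two relate, here’s a sketch that treats site speed as the average load time across a sample of pages. The load times below are made-up numbers, not real measurements, and the function names are hypothetical.

```python
from statistics import mean


def site_speed(page_load_seconds: dict[str, float]) -> float:
    """Average the load times of a sample of pages."""
    return mean(list(page_load_seconds.values()))


def slowest_page(page_load_seconds: dict[str, float]) -> str:
    """Return the URL with the longest load time."""
    return max(page_load_seconds, key=page_load_seconds.get)


# Hypothetical load times in seconds for three sample pages.
sample_pages = {
    "https://www.example.com/": 1.5,
    "https://www.example.com/blog/": 2.5,
    "https://www.example.com/pricing": 3.5,
}
print(site_speed(sample_pages))
print(slowest_page(sample_pages))
```

Speeding up the slowest page in the sample pulls the average down, which is why fixes at the page level show up in your site speed.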

Start by running your pages through Google’s PageSpeed Insights tool or Nexorank’s Website Grader. Either will

generate a speed report and identify elements that may be slowing your page.

There are several common fixes to optimize page speed, like compressing images, minifying code files, using

browser caching, minimizing HTTP requests, and using a content delivery network (CDN).

You may need to work with a developer to implement these fixes as well. To learn more about optimizing site

performance, check out the video, Reducing Page Size and Increasing Load Speed.

Ensuring your site is mobile-friendly is next. This determines how well your website performs on mobile devices.

Did you know that over 50% of all web traffic comes from mobile?

That means a large share of the people who visit your site are likely viewing it on a mobile device. This is why ensuring that your website is mobile-friendly is so

critical. If you ignore mobile, you risk disregarding more than half of your potential traffic.

Start by running your pages through Google’s Mobile-Friendly Test, which is linked in the resources section. This

test will show you whether your page is mobile-friendly. You can also view mobile-friendliness stats for your entire

website in Google Search Console’s Mobile Usability Report. Below this report, you’ll see specific mobile

usability errors that you can resolve to make your website more mobile-friendly.

The most common issues with mobile sites are caused by not using a responsive website design, the mobile and

desktop versions of the site not matching up, and the user experience on mobile being poor.

Out of these, responsive web design is the most important aspect of having a mobile-friendly website. Responsive

design automatically adjusts the contents of a page depending on the size of the viewer’s screen. This ensures

that all content is easily viewable no matter the device someone is using. To learn more about responsive design,

check out the lesson on Optimizing a Website for Mobile.

Having your mobile site match up with your desktop site is also important. Why?

Well, Google began rolling out “mobile-first” indexing in 2018, meaning it primarily crawls and indexes the mobile version of your site rather than the desktop version. This means that if

content is missing from the mobile version of your site, it may not get indexed as it would on desktop.

Make sure this doesn’t happen by checking that these elements are the same across mobile and desktop:

- Meta robots tags (e.g. index, nofollow)

- Title tags

- Heading tags

- Page content

- Images

- Links

- Structured Data

- Robots.txt access
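
You can spot-check a few of these elements by comparing the HTML served to desktop and mobile. The sketch below covers only the title tag, meta robots tag, and headings, and it assumes you’ve already saved both versions of the page. The class and function names are hypothetical.

```python
from html.parser import HTMLParser


class PageSummary(HTMLParser):
    """Collect the title, meta robots value, and h1-h3 headings of a page."""

    HEADINGS = {"h1", "h2", "h3"}

    def __init__(self) -> None:
        super().__init__()
        self.title = ""
        self.robots = ""
        self.headings: list[str] = []
        self._capture = None  # tag whose text is currently being collected
        self._buffer: list[str] = []

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "meta" and (attr.get("name") or "").lower() == "robots":
            self.robots = (attr.get("content") or "").strip().lower()
        elif tag == "title" or tag in self.HEADINGS:
            self._capture = tag
            self._buffer = []

    def handle_data(self, data):
        if self._capture:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if tag == self._capture:
            text = " ".join("".join(self._buffer).split())
            if tag == "title":
                self.title = text
            else:
                self.headings.append(text)
            self._capture = None


def summarize(html: str) -> dict:
    parser = PageSummary()
    parser.feed(html)
    parser.close()
    return {"title": parser.title, "robots": parser.robots, "headings": parser.headings}


def parity_differences(desktop_html: str, mobile_html: str) -> list[str]:
    """Name the elements that differ between desktop and mobile."""
    desktop, mobile = summarize(desktop_html), summarize(mobile_html)
    return [key for key in desktop if desktop[key] != mobile[key]]


desktop_html = (
    '<html><head><title>Pricing</title><meta name="robots" content="index, follow">'
    "</head><body><h1>Pricing</h1><h2>Enterprise plans</h2></body></html>"
)
mobile_html = (
    '<html><head><title>Pricing</title><meta name="robots" content="index, follow">'
    "</head><body><h1>Pricing</h1></body></html>"
)
print(parity_differences(desktop_html, mobile_html))
```

In this made-up example, the mobile page drops a heading, so the headings are reported as different.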

Both responsive design and consistency across mobile and desktop naturally create a better user experience. But

be sure to also check that there’s nothing interfering with people’s ability to navigate your site on mobile devices,

like intrusive pop-ups.

Next, let’s review how to audit your structured data.

According to Google, “Structured data is a standardized format for providing information about a page and

classifying the page content.” For example, a recipe page may include a structured format for the ingredients,

cooking time and temperature, and calories.

Google uses this structured data to better understand the contents of a page and potentially display it in rich

snippets in the SERPs. Simply having structured data markup on your page doesn’t guarantee that Google will

display a rich result for your site, but it gives you a chance to get featured.
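
To make the recipe example concrete, here’s a sketch that builds a JSON-LD snippet using the schema.org Recipe type. The recipe values are invented, the helper function is hypothetical, and a real page would likely need more properties to qualify for a rich result.

```python
import json

# Hypothetical recipe data using schema.org Recipe properties.
recipe = {
    "@context": "https://schema.org",
    "@type": "Recipe",
    "name": "Simple Banana Bread",
    "recipeIngredient": ["3 ripe bananas", "2 cups flour", "1 egg"],
    "cookTime": "PT60M",  # ISO 8601 duration: 60 minutes
    "recipeInstructions": "Bake at 350 degrees F for 60 minutes.",
    "nutrition": {"@type": "NutritionInformation", "calories": "240 calories"},
}


def json_ld_script(data: dict) -> str:
    """Wrap structured data in the script tag used for JSON-LD."""
    body = json.dumps(data, indent=2)
    return f'<script type="application/ld+json">\n{body}\n</script>'


print(json_ld_script(recipe))
```

You could paste the printed snippet into a testing tool to see whether the markup is read the way you expect.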

If you have existing structured data on your site, make sure it’s displaying properly by running it through Google’s

Structured Data Testing Tool and their Rich Results Test. Spotting errors in your website’s code requires

practice, especially if you’re not a regular web developer. Whenever you see errors flagged in one of your testing

tools, check the code and try to identify what went wrong.

According to SEMrush, “Diagnosing technical SEO issues goes much faster and is far less frustrating when your

code is clean and correct.” This most likely means you’ll have to work with your web developer to fix or implement

the structured data on your site.

Finally, let’s review JavaScript rendering.

Normally, a search engine spider crawls a web page and then passes it to the indexing stage. But for websites with

content added by JavaScript, there’s an extra stage between crawling and indexing: the rendering stage.

While Googlebot can run most JavaScript, it’s not always guaranteed. You need to make sure that Google can see

and render any JavaScript-added content on your site during your technical audit.

So, how do you do this? Use the URL Inspection Tool in Google Search Console to plug in your JavaScript page.

This will display a screenshot of how Google sees your page, which helps you determine whether Google is rendering your

JavaScript. You can also view loaded resources, JavaScript output, and more information that can help you and

your web developer debug any issues.
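
If you have both the raw HTML your server sends and the rendered HTML after JavaScript runs, a simple comparison can show which content depends on rendering. This is only a sketch. The HTML strings are made up, the function name is hypothetical, and it assumes you’ve obtained the rendered HTML separately.

```python
def rendered_only_phrases(raw_html: str, rendered_html: str, phrases: list[str]) -> list[str]:
    """Return phrases that appear only after JavaScript has rendered the page."""
    return [
        phrase
        for phrase in phrases
        if phrase in rendered_html and phrase not in raw_html
    ]


# Hypothetical single-page application: the server sends an empty shell.
raw_html = '<html><body><div id="app"></div><script src="/app.js"></script></body></html>'
rendered_html = '<html><body><div id="app"><h1>Pricing plans</h1></div></body></html>'

print(rendered_only_phrases(raw_html, rendered_html, ["Pricing plans"]))
```

Any phrase this returns is content a crawler will only see if rendering succeeds, so those are the pages to check first.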

This is mostly a concern for sites with SPAs (single-page applications), or sites that have custom content elements

added via JavaScript. You’ll need to work with your web developer to address any issues with JavaScript

rendering.

We’ve reached the end of your technical SEO audit!

Be sure to use the checklist to track any issues you find and to take note of fixes you need implemented.

While we’ve covered several different categories that you can include in a comprehensive technical SEO audit, this

list isn’t exhaustive. There are other pieces of site information that you may want to consider depending on the

business and the type of website you’re auditing, such as different language versions on international sites or

pagination for ecommerce sites.

You may also want to pair a technical SEO audit with a content audit by reviewing all of the on-page elements, like

content, keywords, and images, and a backlink audit to assess the site’s off-page SEO performance.